Performance Analysis and Best Practices for Multi-threading and Multi-processing

On modern multi-core systems, the number of threads or processes you choose has a large effect on program performance. This article presents conclusions and insights drawn from experimental data. The code is available on GitHub.

System Environment

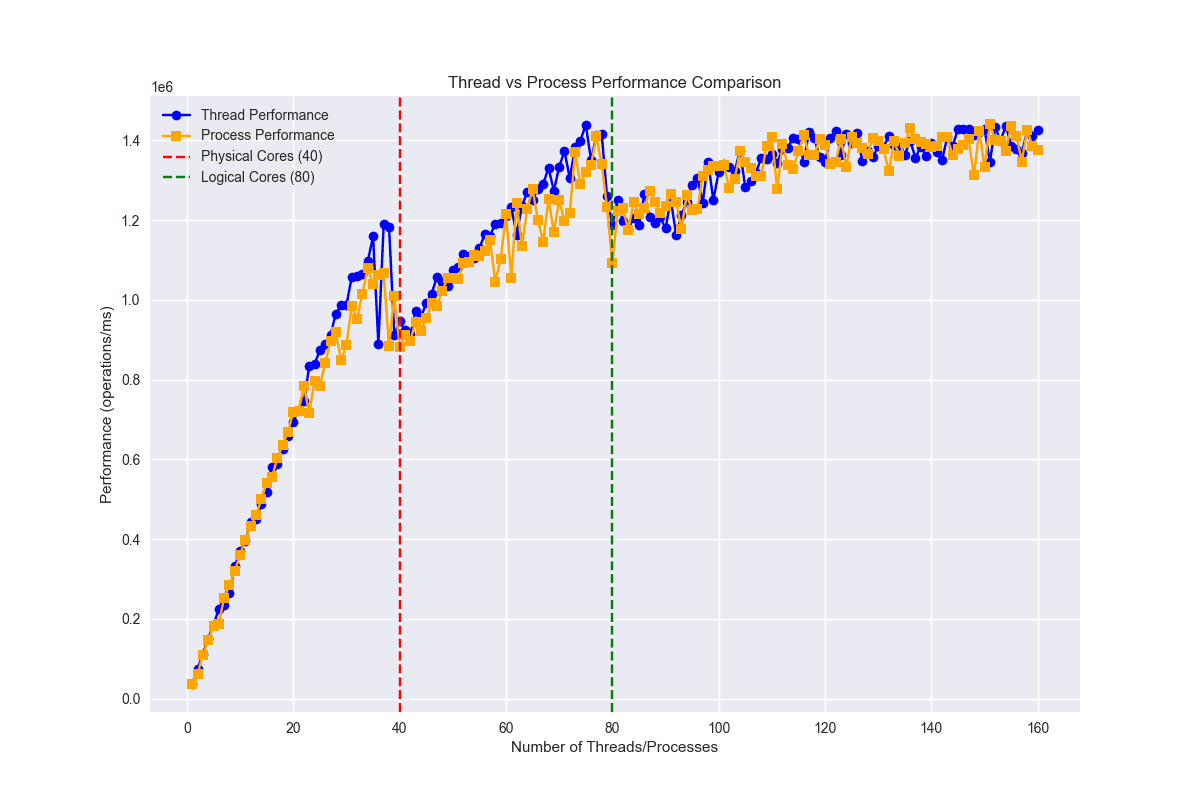

- Physical cores: 40

- Logical cores: 80
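To check the same two numbers on your own machine, the following is a minimal Python sketch. It assumes a Linux x86 system where `/proc/cpuinfo` reports `physical id` and `core id` fields; `count_physical_cores` is a hypothetical helper written for this illustration, not part of the article's code.

```python
import os


def count_physical_cores(cpuinfo_text):
    """Count unique (physical id, core id) pairs in /proc/cpuinfo-style text."""
    cores = set()
    # Each logical processor is described by one blank-line-separated block.
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "core id" in fields:
            # Two logical cores on the same physical core share both ids.
            cores.add((fields.get("physical id"), fields["core id"]))
    return len(cores)


if __name__ == "__main__":
    # os.cpu_count() reports logical cores and may return None.
    print("logical cores:", os.cpu_count())
    try:
        with open("/proc/cpuinfo") as f:
            print("physical cores:", count_physical_cores(f.read()))
    except OSError:
        print("physical cores: unavailable on this platform")
```

On a machine like the one described here, the two printed values would be expected to differ by a factor of two when hyper-threading is enabled.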

Performance Data Analysis

The following characteristics can be observed from the data:

- Linear Growth Phase: From 1 to 40 threads, performance increases almost linearly because each thread can occupy a physical core exclusively.

- Sub-linear Growth Phase: From 40 to 80 threads, performance continues to improve but more slowly, because the additional threads share physical cores through hyper-threading.

- Performance Convergence Phase: Beyond 80 threads, performance fluctuates but generally maintains the same level.

- Thread and Process Performance Curves: The performance curves for threads and processes are essentially identical. Don't be misled by the term "hyper-threading" - the technology provides equal hardware support for both threads and processes, as explained later.

- Additional Note: The linear and sub-linear phases do not peak exactly at 40 and 80 because other tasks running on the system consume some resources. You can run your own tests under controlled conditions.
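If you want to run a comparable measurement yourself, a harness along these lines is one way to start. This is a sketch only and is not the article's benchmark: `busy_work`, `measure_throughput`, and the task sizes are assumptions chosen for illustration. Note that in CPython builds with the global interpreter lock, CPU-bound threads do not run in parallel, so the thread curve described above would need a language or runtime without that restriction; the sketch therefore sweeps process counts.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def busy_work(iterations):
    """CPU-bound placeholder task: sum of squares below `iterations`."""
    total = 0
    for i in range(iterations):
        total += i * i
    return total


def measure_throughput(executor_cls, workers, tasks=64, iterations=200_000):
    """Return completed tasks per second with `workers` workers.

    Pool start-up and shutdown time is included in the measurement.
    """
    start = time.perf_counter()
    with executor_cls(max_workers=workers) as pool:
        results = list(pool.map(busy_work, [iterations] * tasks))
    elapsed = time.perf_counter() - start
    assert len(results) == tasks
    return tasks / elapsed


if __name__ == "__main__":
    # Sweep a few worker counts; extend the tuple past your logical core count.
    for workers in (1, 2, 4, 8):
        rate = measure_throughput(ProcessPoolExecutor, workers)
        print(f"{workers:>3} processes: {rate:8.1f} tasks/s")
```

Passing `ThreadPoolExecutor` instead of `ProcessPoolExecutor` runs the same sweep with threads. For stable numbers, run on an otherwise idle machine and repeat each measurement several times.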

Why is Optimal Performance Achieved Near the Number of Logical Cores?

Hardware Resource Matching

- When the number of threads/processes equals the number of logical cores, every logical core has work to do and none is oversubscribed

- Each thread/process gets its own hardware execution context (a logical core), which avoids excessive context switching

Impact of Hyper-threading Technology

- Hyper-threading exposes each physical core to the operating system as two logical cores

- Hyper-threading can improve performance when a single thread leaves part of a core's execution resources idle

- This explains why performance continues to improve between 40-80 threads/processes

Resource Contention

- Running more threads/processes than there are logical cores leads to more frequent context switching

- This increases scheduling overhead and resource contention

- This explains why performance fluctuates beyond 80 threads/processes
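The three phases can be summarized as a toy piecewise model. The sketch below is illustrative only: the function name and the `ht_gain` value of 0.3 (the extra throughput contributed by each additional logical core beyond the physical cores) are assumptions, not numbers measured in this article. It also ignores the fluctuation caused by oversubscription.

```python
def modeled_speedup(workers, physical=40, logical=80, ht_gain=0.3):
    """Idealized speedup over one worker for a CPU-bound workload.

    ht_gain is a hypothetical per-worker gain from hyper-threading.
    """
    if workers <= 0:
        raise ValueError("workers must be positive")
    if workers <= physical:
        # Linear phase: each worker has a physical core to itself.
        return float(workers)
    # Sub-linear phase: extra workers share cores through hyper-threading.
    # Beyond `logical` workers, no further hardware contexts are available.
    extra = min(workers, logical) - physical
    return physical + ht_gain * extra


if __name__ == "__main__":
    for n in (1, 20, 40, 60, 80, 120):
        print(f"{n:>3} workers -> modeled speedup {modeled_speedup(n):.1f}")
```

Fitting `ht_gain` to your own measurements gives a rough estimate of how much hyper-threading contributes for a particular workload.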

Relationship Between Hyper-threading Technology and Multi-processing

Logical Cores and Hyper-threading

In our system, 40 physical cores support 80 logical cores through Intel Hyper-Threading Technology (HT). Here's how hyper-threading works:

Hardware-level Implementation

- Each physical core contains two independent register sets (architectural states)

- The two logical cores share the execution units, caches, and other computing resources of the one physical core

- The operating system sees these two register sets as independent logical cores

Resource Sharing Mechanism

The two logical cores of a physical core share the following resources:

- Instruction pipeline

- L1/L2 cache

- Execution units (ALU, FPU, etc.)

- Branch predictor

Why Can Multi-processing Also Utilize Hyper-threading?

OS-level Scheduling

- The OS scheduler doesn't differentiate between processes and threads when assigning work to logical cores

- The scheduler treats both processes and threads as schedulable execution units

- At the hardware level, both processes and threads are mapped to logical cores for execution
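One way to see that threads are scheduled as their own units is to look at the identifiers the kernel assigns to them. The sketch below assumes Python 3.8 or later on a platform that supports `threading.get_native_id()`; `native_id_in_new_thread` is a hypothetical helper for this illustration.

```python
import os
import threading


def native_id_in_new_thread():
    """Start a thread and return the kernel-assigned ID it ran under."""
    box = []
    worker = threading.Thread(
        target=lambda: box.append(threading.get_native_id())
    )
    worker.start()
    worker.join()
    return box[0]


if __name__ == "__main__":
    print("process id:           ", os.getpid())
    print("main thread native id:", threading.get_native_id())
    print("worker native id:     ", native_id_in_new_thread())
```

On Linux, the main thread's native ID matches the process ID, while the worker thread receives its own ID, which reflects that the kernel schedules each thread as a separate task.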

Universality of Hyper-threading

- Hyper-threading is a hardware-level parallelism technology

- It doesn't care whether an instruction stream belongs to a process or a thread

- As long as there are two independent instruction streams, it can utilize the parallel capabilities provided by hyper-threading

Performance Improvement Principle

- When one process stalls on a memory access (for example, a cache miss), the process on the sibling logical core can use the idle execution resources

- This improves hardware utilization through resource sharing

- This explains why performance can still improve with process counts between 40-80

This also explains why, in our tests, both multi-threading and multi-processing achieve optimal performance as the worker count approaches the number of logical cores (80). Hyper-threading provides equal hardware support for both parallel approaches.

Best Practice Recommendations

Thread Count Selection

- For CPU-intensive tasks, set the thread count equal to the number of logical cores

- For I/O-intensive tasks, you can increase the thread count moderately, but it should not exceed twice the number of logical cores

Process Count Selection

- Set the process count to the number of logical cores as well

- Given the higher overhead of process creation and management, be more cautious when increasing the number of processes
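These recommendations can be expressed as a small helper. This is a sketch under the article's rules of thumb; the function name and the `"cpu"` / `"io"` labels are assumptions introduced for illustration. It returns a range rather than a single number because the I/O guidance gives only an upper bound.

```python
import os


def suggested_worker_range(workload, logical_cores=None):
    """Return (starting_point, ceiling) for the worker count.

    workload: "cpu" for CPU-intensive tasks, "io" for I/O-intensive tasks.
    logical_cores: defaults to os.cpu_count(), or 1 if that is unavailable.
    """
    if logical_cores is None:
        logical_cores = os.cpu_count() or 1
    if workload == "cpu":
        # CPU-intensive: match the number of logical cores.
        return (logical_cores, logical_cores)
    if workload == "io":
        # I/O-intensive: may go higher, but no more than twice the logical cores.
        return (logical_cores, 2 * logical_cores)
    raise ValueError(f"unknown workload type: {workload!r}")


if __name__ == "__main__":
    print("CPU-intensive:", suggested_worker_range("cpu"))
    print("I/O-intensive:", suggested_worker_range("io"))
```

For processes, staying near the lower end of the range is the more cautious choice given their higher creation and management overhead.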